Wearable Depth-Sensing Projection System Makes Any Surface Capable of Multitouch Interaction

Researchers From Microsoft Research and Carnegie Mellon Create OmniTouch Technology

Byron Spice | Monday, October 17, 2011

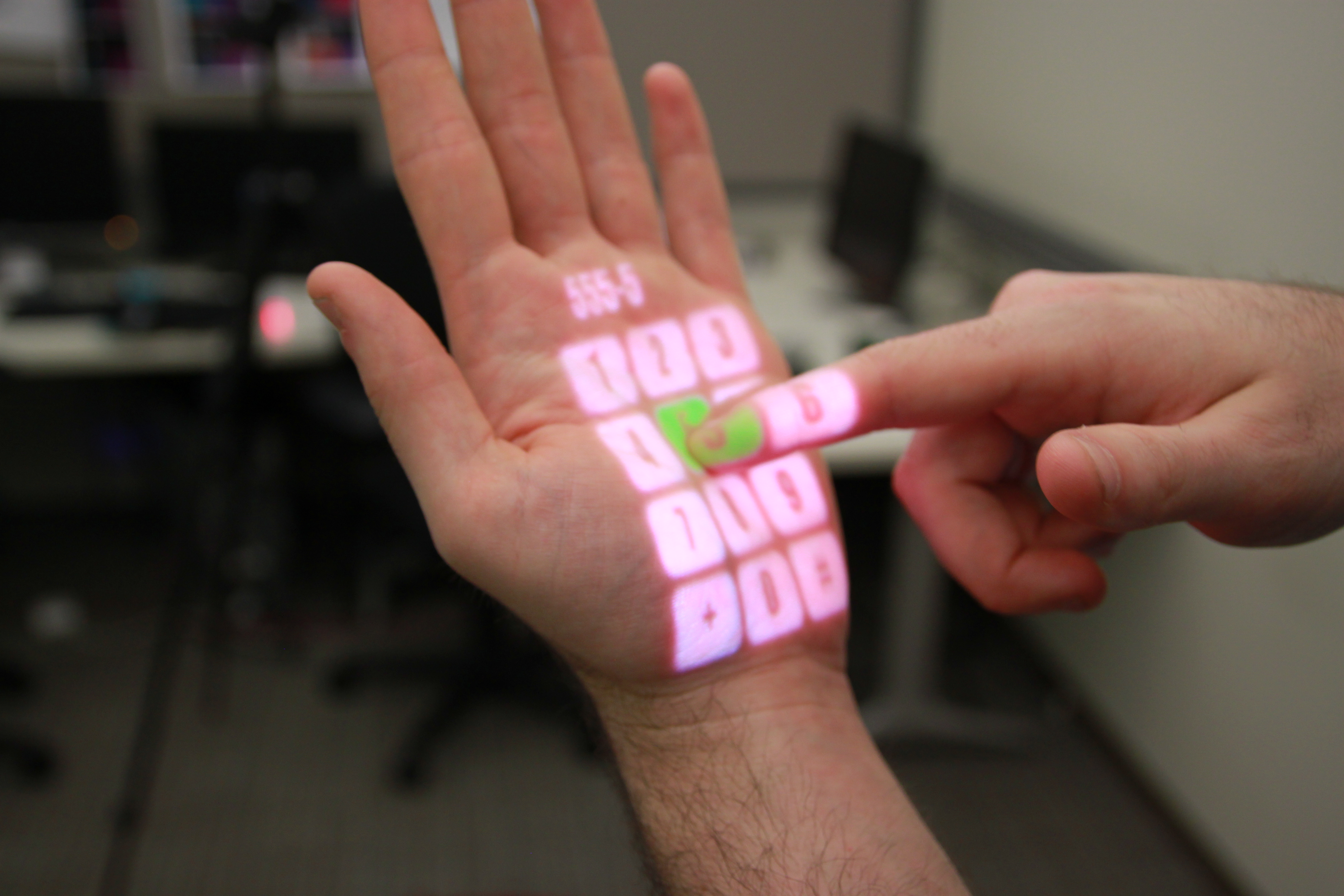

PITTSBURGH - OmniTouch, a wearable projection system developed by researchers at Microsoft Research and Carnegie Mellon University, enables users to turn pads of paper, walls or even their own hands, arms and legs into graphical, interactive surfaces.

OmniTouch employs a depth-sensing camera, similar to the Microsoft Kinect, to track the user's fingers on everyday surfaces. This allows users to control interactive applications by tapping or dragging their fingers, much as they would with touchscreens found on smartphones or tablet computers. The projector can superimpose keyboards, keypads and other controls onto any surface, automatically adjusting for the surface's shape and orientation to minimize distortion of the projected images.

"It's conceivable that anything you can do on today's mobile devices, you will be able to do on your hand using OmniTouch," said Chris Harrison, a Ph.D. student in Carnegie Mellon's Human-Computer Interaction Institute. The palm of the hand could be used as a phone keypad, or as a tablet for jotting down brief notes. Maps projected onto a wall could be panned and zoomed with the same finger motions that work with a conventional multitouch screen.

Harrison was an intern at Microsoft Research when he developed OmniTouch in collaboration with Microsoft Research's Hrvoje Benko and Andrew D. Wilson. Harrison will describe the technology on Wednesday (Oct. 19) at the Association for Computing Machinery's Symposium on User Interface Software and Technology (UIST) in Santa Barbara, Calif.

A video demonstrating OmniTouch and additional downloadable media are available at: http://www.chrisharrison.net/index.php/Research/OmniTouch

The OmniTouch device includes a short-range depth camera and laser pico-projector and is mounted on a user's shoulder. But Harrison said the device ultimately could be the size of a deck of cards, or even a matchbox, so that it could fit in a pocket, be easily wearable, or be integrated into future handheld devices.

"With OmniTouch, we wanted to capitalize on the tremendous surface area the real world provides," said Benko, a researcher in Microsoft Research's Adaptive Systems and Interaction group. "We see this work as an evolutionary step in a larger effort at Microsoft Research to investigate the unconventional use of touch and gesture in devices to extend our vision of ubiquitous computing even further. Being able to collaborate openly with academics and researchers like Chris on such work is critical to our organization's ability to do great research - and to advancing the state of the art of computer user interfaces in general."

Harrison previously worked with Microsoft Research to develop Skinput, a technology that used bioacoustic sensors to detect finger taps on a person's hands or forearm. Skinput thus enabled users to control smartphones or other compact computing devices.

The optical sensing used in OmniTouch, by contrast, allows a wide range of interactions, similar to the capabilities of a computer mouse or touchscreen. It can track three-dimensional motion on the hand or other commonplace surfaces, and can sense whether fingers are "clicked" or hovering. What's more, OmniTouch does not require calibration - users can simply wear the device and immediately use its features. The environment needs no instrumentation; the wearable device is all that is required.
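The release does not say how OmniTouch tells a "clicked" finger from a hovering one. As a rough illustration only, the Python sketch below assumes the depth camera supplies two distances per frame: one to the fingertip and one to the surface just beyond it. It classifies the finger by the gap between them. The `FingerSample` class, the `classify` and `tap_events` functions, and both millimetre thresholds are hypothetical and are not taken from the OmniTouch work.

```python
from dataclasses import dataclass

# Hypothetical thresholds in millimetres (assumptions, not OmniTouch values).
CLICK_MAX_GAP_MM = 10.0   # fingertip this close to the surface counts as touching
HOVER_MAX_GAP_MM = 50.0   # farther than this, the finger is ignored


@dataclass
class FingerSample:
    """One frame of (assumed) depth readings for a single tracked finger."""
    fingertip_depth_mm: float  # camera-to-fingertip distance
    surface_depth_mm: float    # camera-to-surface distance just beyond the fingertip


def classify(sample: FingerSample) -> str:
    """Return 'clicked', 'hovering', or 'none' based on the fingertip-to-surface gap."""
    gap = sample.surface_depth_mm - sample.fingertip_depth_mm
    # A fingertip measured slightly behind the surface is treated as sensor
    # noise, so the gap is clamped to zero (i.e. counted as contact).
    gap = max(0.0, gap)
    if gap <= CLICK_MAX_GAP_MM:
        return "clicked"
    if gap <= HOVER_MAX_GAP_MM:
        return "hovering"
    return "none"


def tap_events(samples: list[FingerSample]) -> list[int]:
    """Return the frame indices where the finger newly enters the 'clicked' state."""
    events = []
    previous = "none"
    for index, sample in enumerate(samples):
        state = classify(sample)
        if state == "clicked" and previous != "clicked":
            events.append(index)
        previous = state
    return events


if __name__ == "__main__":
    frames = [
        FingerSample(470.0, 500.0),  # hovering 30 mm above the surface
        FingerSample(498.0, 500.0),  # touching
        FingerSample(499.0, 500.0),  # still touching: no new tap
        FingerSample(470.0, 500.0),  # lifted back to hover
        FingerSample(497.0, 500.0),  # touching again: second tap
    ]
    print(tap_events(frames))
```

A tap is reported only on the transition into the clicked state, so a finger resting on the surface produces one event rather than one per frame. A real system would also need to segment the fingers out of the depth image and smooth noisy readings, which this sketch leaves out.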

The Human-Computer Interaction Institute is part of Carnegie Mellon's acclaimed School of Computer Science. Follow the school on Twitter @SCSatCMU.


Byron Spice | 412-268-9068 | bspice@cs.cmu.edu