CMU, Apple Team Improves iOS App Accessibility

SCS Researchers Part of Best Paper Effort at CHI 2021

Aaron Aupperlee | Wednesday, June 16, 2021

A team at Apple analyzed nearly 78,000 screenshots from more than 4,000 apps to improve the screen reader function on its mobile devices. The result was Screen Recognition, a tool that uses machine learning and computer vision to automatically detect and provide content readable by VoiceOver for apps that would otherwise not be accessible.

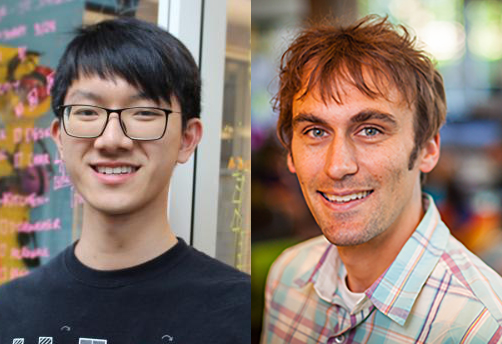

Jason Wu, a Ph.D. student in Carnegie Mellon University's Human-Computer Interaction Institute (HCII), was part of the team, whose work, "Screen Recognition: Creating Accessibility Metadata for Mobile Applications From Pixels," won a Best Paper award at the recent Association for Computing Machinery (ACM) Computer-Human Interaction (CHI) conference. His advisor, Jeffrey Bigham, an associate professor in HCII and the Language Technologies Institute and head of the Human-Centered Machine Learning Group at Apple, was also among the paper's authors.

Apple's VoiceOver uses metadata supplied by app developers that describes user interface components. Without this metadata, VoiceOver may not be able to read a section of text aloud; describe a photo; or assist with using a button, slider or toggle. This could leave an entire app inaccessible to a user with a disability.

"We saw an opportunity to apply machine learning and computer vision techniques to automatically generate this metadata for many apps from their visual appearance, which greatly improved the VoiceOver experience for previously inaccessible apps," Wu said.

The team first collected and annotated screenshots to train the Screen Recognition model to identify user interface elements. They then worked with blind quality assurance engineers to improve the way the screen reader relayed information. Finally, the team grouped items, such as photos and their subtext; created a way for users to know if an item was clickable; and enabled elements on the screen to be read in a logical order.
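
To give a rough sense of what "read in a logical order" can mean in practice, here is a minimal Python sketch. It is not the team's method, which this article does not describe; it assumes a simple row-based heuristic in which detected elements are read top to bottom and, within a row, left to right. The `Element` class, its fields, the `reading_order` function and the `row_tolerance` parameter are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class Element:
    """A detected UI element (hypothetical structure, for illustration only)."""
    label: str     # e.g. the detected type or text of the element
    x: float       # left edge, in pixels
    y: float       # top edge, in pixels
    width: float
    height: float


def reading_order(elements, row_tolerance=0.5):
    """Order elements top to bottom, then left to right within each row.

    Assumed heuristic: an element joins the current row when its vertical
    centre lies within row_tolerance * height of the row's first element.
    """
    rows = []
    for el in sorted(elements, key=lambda e: e.y + e.height / 2):
        centre = el.y + el.height / 2
        if rows:
            anchor = rows[-1][0]
            anchor_centre = anchor.y + anchor.height / 2
            if abs(centre - anchor_centre) <= row_tolerance * anchor.height:
                rows[-1].append(el)
                continue
        rows.append([el])
    # Flatten the rows, sorting each one by its left edge.
    return [el for row in rows for el in sorted(row, key=lambda e: e.x)]


if __name__ == "__main__":
    screen = [
        Element("Button", 10, 200, 120, 44),
        Element("Caption", 120, 110, 180, 30),
        Element("Title", 10, 10, 300, 40),
        Element("Photo", 10, 80, 100, 100),
    ]
    for el in reading_order(screen):
        print(el.label)
```

In this sketch a photo and the caption beside it land in the same row and are read one after the other, which loosely mirrors the grouping of photos with their subtext mentioned above. A production system would need to handle overlapping, nested and multi-column layouts that this heuristic ignores.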

Users who tested Screen Recognition found it made apps more accessible. One person told the researchers that they could play a game with Screen Recognition enabled that had been completely unusable without it.

"Guess who has a new high score? I am in AWE! This is incredible," the person wrote.

Screen Recognition was released as a feature in iOS 14 last September, and the team continues to improve on it. The model created for Screen Recognition could also be used to help developers create apps that are more accessible to begin with.

Read more about the work behind Screen Recognition and the team's hopes for the feature's future in a post on the Machine Learning Research at Apple page.

Aaron Aupperlee | 412-268-9068 | aaupperlee@cmu.edu